ERP | What is ERP? | Benefits of ERP

What is ERP?

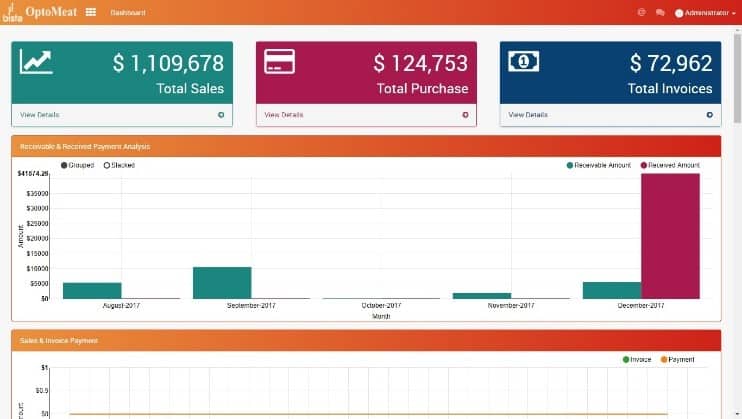

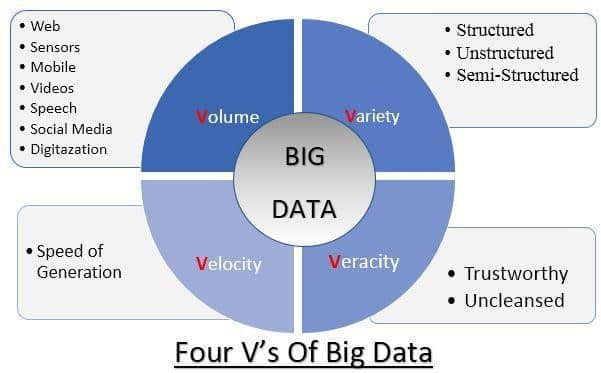

ERP, or Enterprise Resource Planning, is software that centralizes business information into a single platform. This reduces complexity for businesses whose departments often rely on separate software for their different needs. ERP gives a business a single top-down overview, along with the ability to draw insights from the combined data.

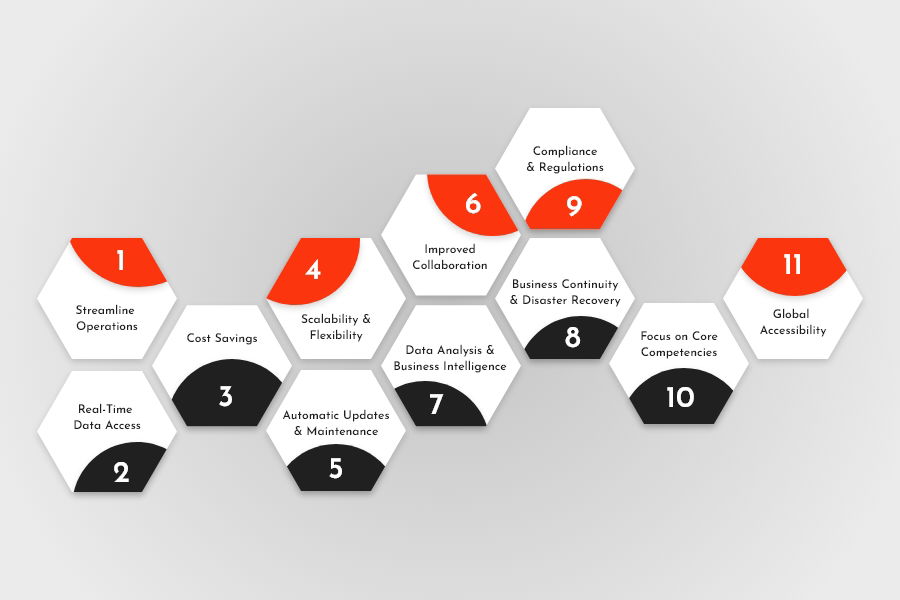

The benefits of implementing ERP include:

- Lowered costs

- Streamlined business processes

- Real-time overview of the entire business

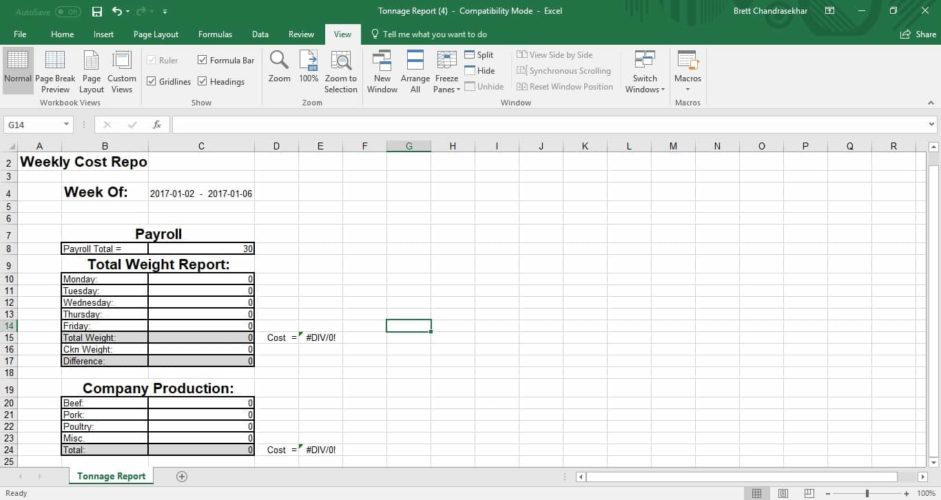

- Business insights based on data

- Fewer data contradictions between departments

- Enhanced labor productivity

- Increased ease in complying with regulations

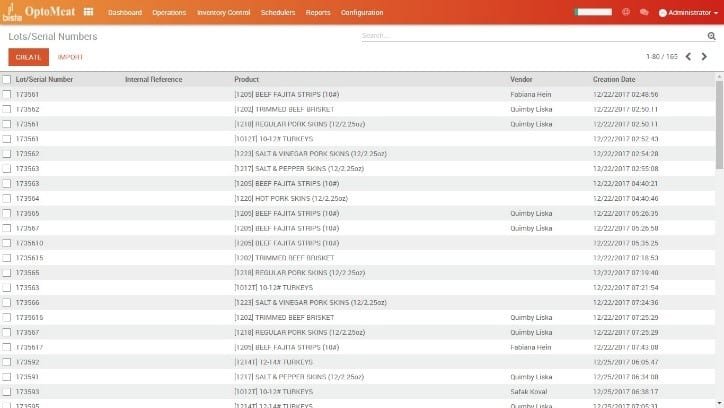

ERP software typically contains several modules, such as CRM, sales, human resources, accounting, distribution, and production. Employees in different departments have access to different parts of the system, depending on their role. Data entered by one department can then flow seamlessly to other parts of the business.

Nowadays, many companies are implementing cloud ERP systems rather than on-premise systems. Cloud ERP offers the same benefits as on-premise systems but also lowers the barrier to entry. With cloud systems, companies don’t have to purchase and manage servers or hire the IT staff required to host their own system. The ERP provider handles this instead, allowing companies to implement the system more quickly and at lower cost. Other benefits include scalability and geographic mobility: because the system is accessed through the internet, a global company can connect all of its locations through one system rather than maintaining several.

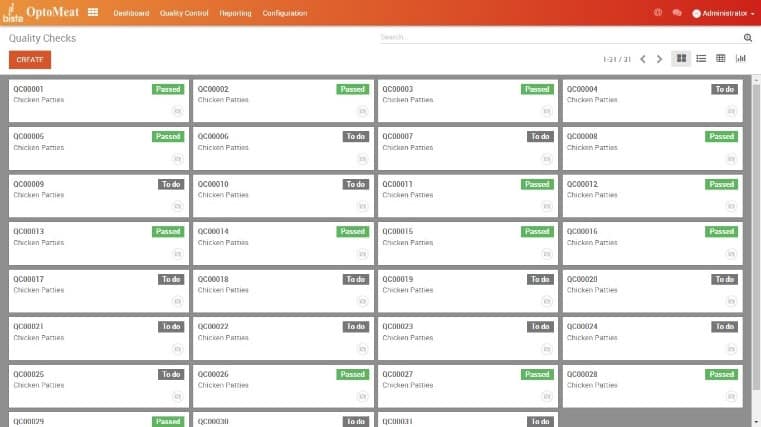

Common examples of cloud ERP software include NetSuite, a widely used business ERP suite, and Odoo, a popular open-source platform. Some companies also create industry-specific software for industries with unique requirements. For example, Bista Solutions offers customized ERP software for the meat processing industry (OptoMeat) and the automotive industry (Turbo).

Despite its many benefits, implementing ERP can be costly and time-consuming. Before starting a project, organizations need a clear understanding of their goals and of what they want from the new system. They also need to partner with a reliable ERP implementation firm to make sure the system fits their needs. Identifying the right ERP partner may have been difficult in the past. Today it is much easier: shortlist candidate partners, then review what they have achieved in your industry and which implementations they have completed.

Bista Solutions is a firm that specializes in ERP implementation and has partnered with companies across multiple market segments, including manufacturing, eCommerce, automotive, healthcare, retail, services, telecommunications, and more. If you’re interested in implementing Odoo or have any questions, contact us.

Benefits of Cloud ERP Implementation for Your Business


Instant infrastructure
