Odoo Commission Tracker for Field Employees


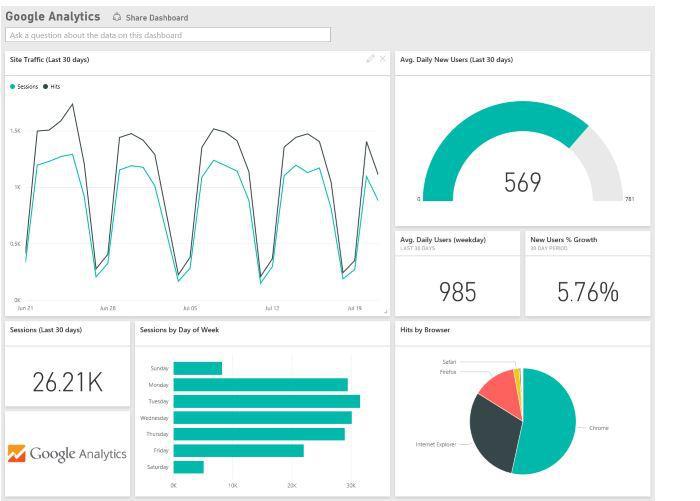

Odoo Commission Tracker (CT) is a feature developed by Bista Solutions for one of our US clients to track the daily sales performance of employees, along with their previous sales performance.

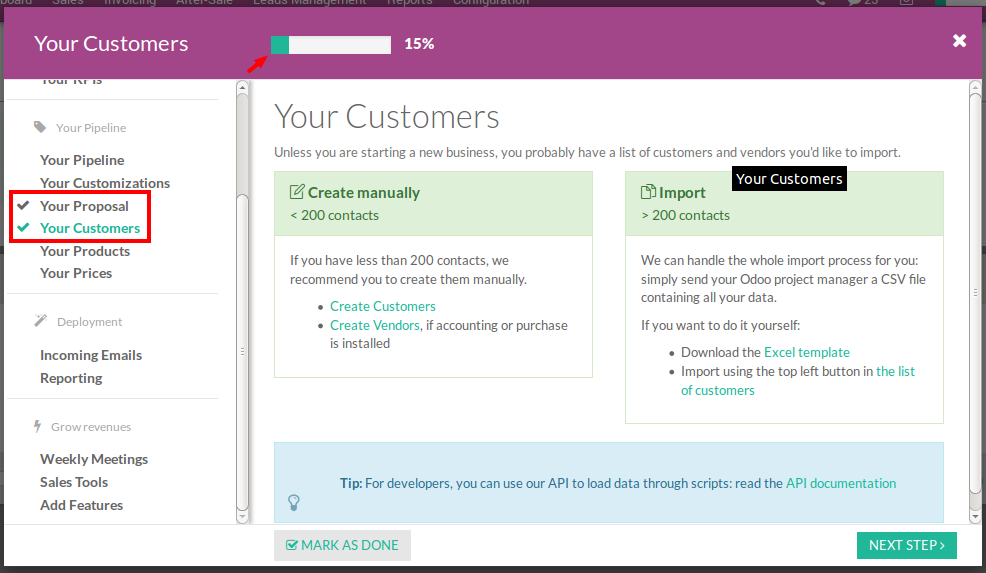

Traditionally, field employees had no way to track their performance on a daily basis or to see where they stood on the leaderboard rankings. This led to confusion and, indirectly, to lower motivation.

Odoo Commission Tracker is a performance booster that lets employees view their performance to date within a few seconds. Tracking becomes much easier for them than repeatedly checking their performance at the reporting level.

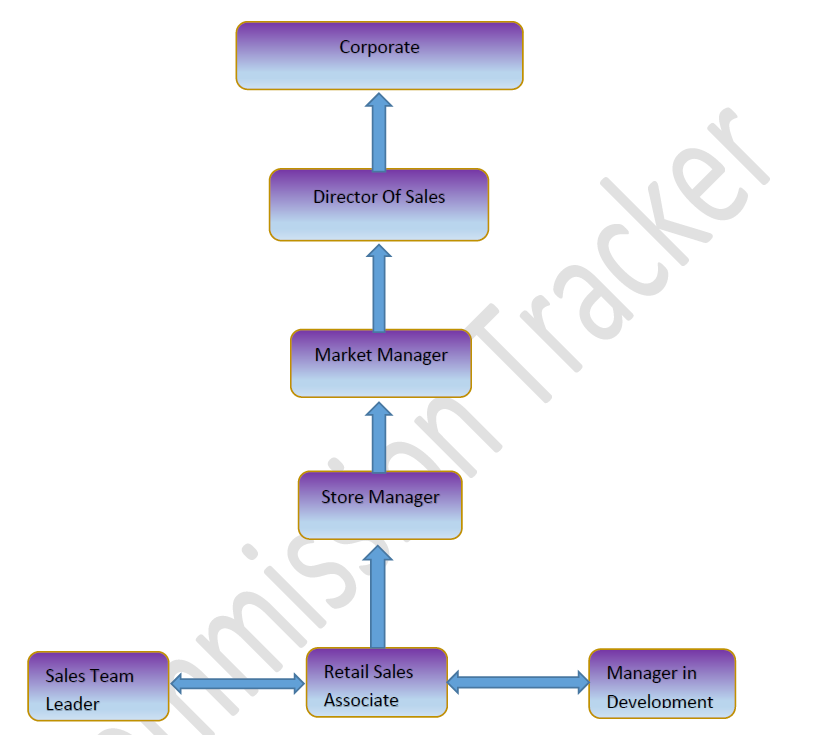

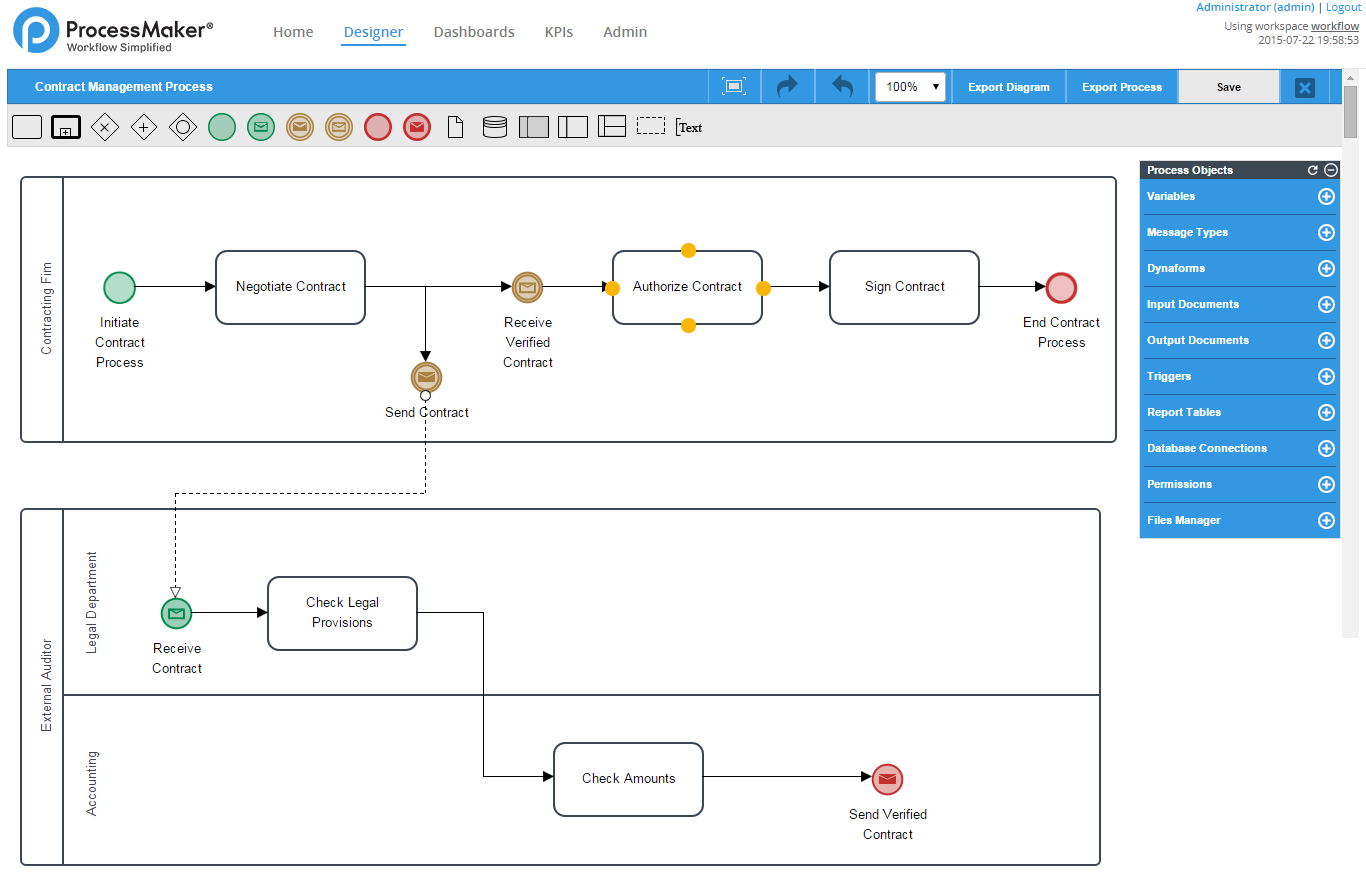

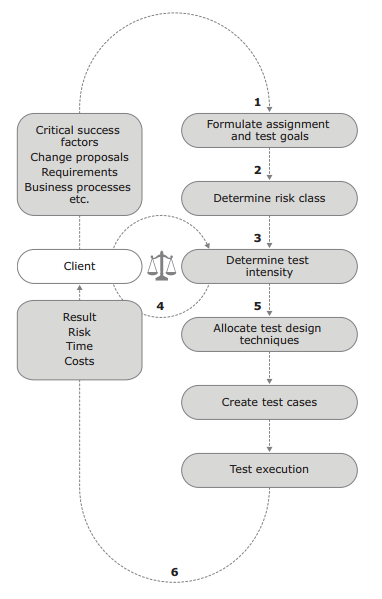

Consider an example of an organizational hierarchy for a sales department.

Here’s a hierarchical diagram that can represent the sales department of a telecom business.

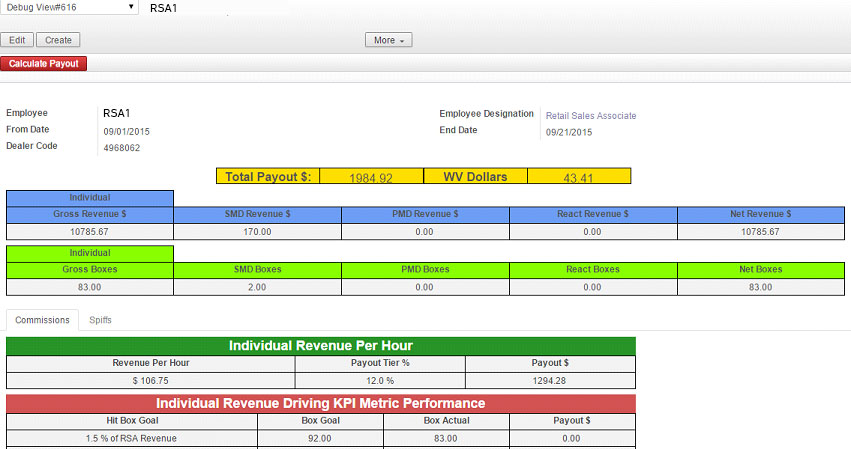

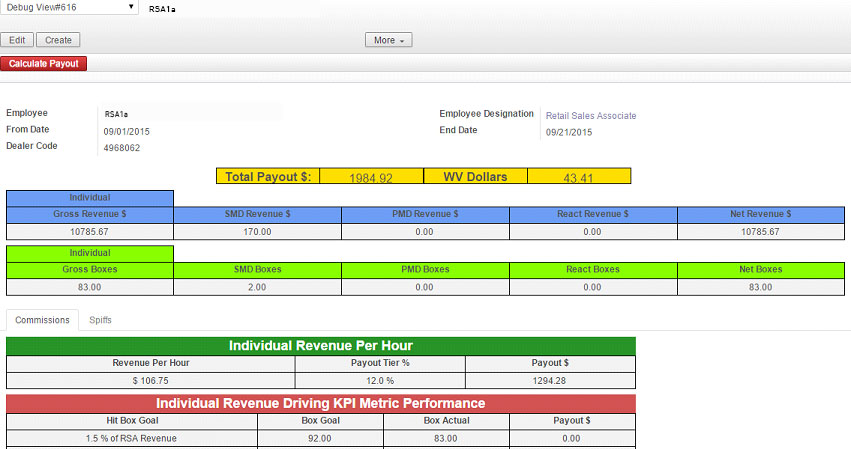

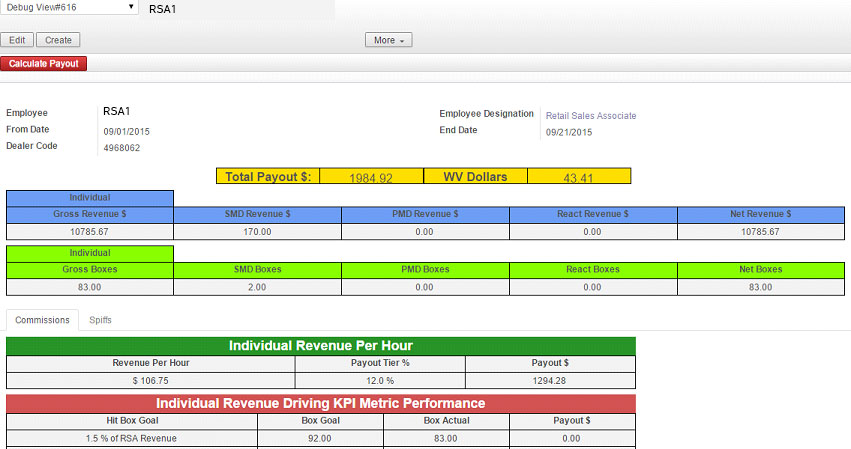

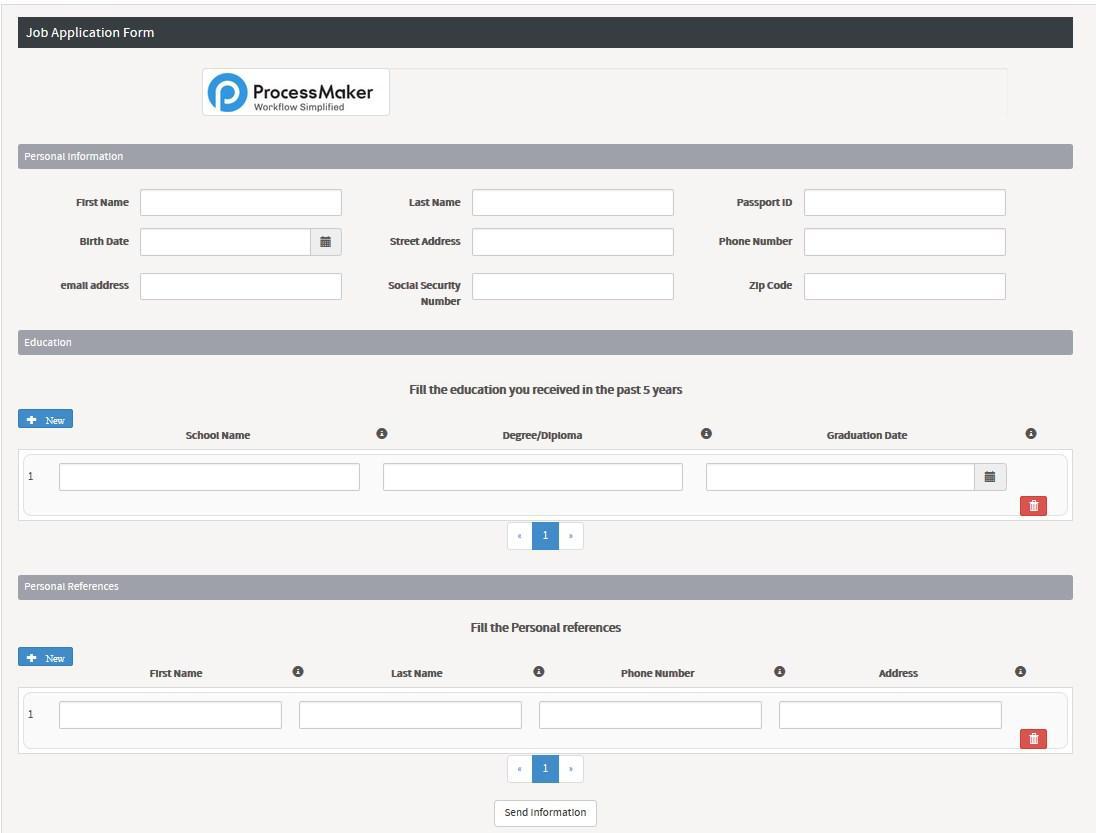

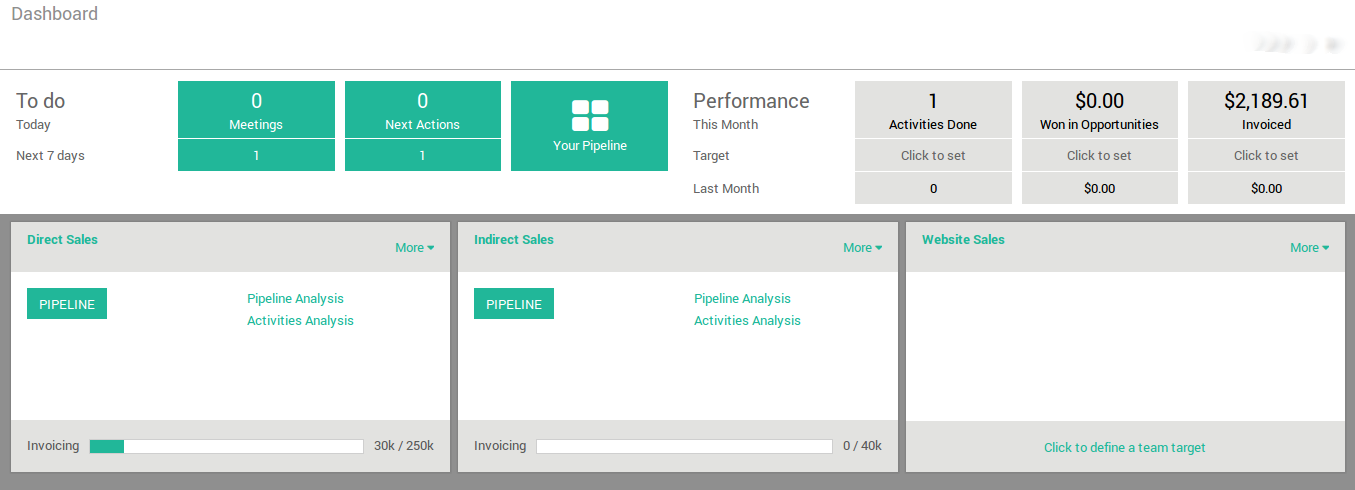

The design below represents the CT for the job position of Retail Sales Associate (RSA), which stands at the bottom of the organization but does the most important job of all.

It shows the performance of the RSA on different parameters, based on the plans offered by the telecom giant, and how much commission/payout he is earning month to date.

Features:-

- CT offers clear advantages over other commission apps thanks to its dynamic nature and its automatic adjustment to different hierarchies within an organization.
- CT is dynamic: based on the person who is logged into the system and his position in the company, a specific CT design is assigned to him, and he can run the CT for himself and for the employees who fall under his position in the company.
- CT assembles the design as well as the calculation logic according to the payout structure of an organization, so it can suit any organization from retail to telecom.
- The only configuration a system admin has to do is set up the commission structure for all employees, based on their job position, department, or role in the organization. CT handles the rest.
- The commission structure can be set up monthly or quarterly, depending on how an organization plans its P&L calculation.
- CT can be run monthly or quarterly to check the performance of an employee, store, market, or region.

Different parameters can be considered in a CT, based on the type of business and its products or services, and they can easily be incorporated in a single form.
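To illustrate the hierarchy-driven behaviour described in the features above, here is a minimal sketch in plain Python. It is not Bista's implementation and does not use the Odoo ORM; the `Employee` class, the `visible_employees` helper, and the sample names are all hypothetical. It only shows the idea that a logged-in user can run the CT for himself and for everyone below him in the hierarchy.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Employee:
    """Hypothetical employee record; not an actual Odoo model."""
    name: str
    job_position: str
    reports: list[Employee] = field(default_factory=list)


def visible_employees(user: Employee) -> list[str]:
    """Names whose CT the logged-in user may run: the user first,
    then everyone below the user in the hierarchy (depth-first)."""
    names = [user.name]
    for report in user.reports:
        names.extend(visible_employees(report))
    return names


# Hypothetical slice of a telecom sales hierarchy.
rsa_1 = Employee("Asha", "Retail Sales Associate")
rsa_2 = Employee("Ben", "Retail Sales Associate")
rsm = Employee("Carla", "Retail Store Manager", [rsa_1, rsa_2])
market_manager = Employee("Dev", "Market Manager", [rsm])

print(visible_employees(rsa_1))           # an RSA sees only his own CT
print(visible_employees(market_manager))  # a manager also sees his subtree
```

In a real Odoo module, the same rule would more likely be expressed through record rules or a parent/child relation on the employee model; the recursion here is just the simplest way to show the behaviour.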

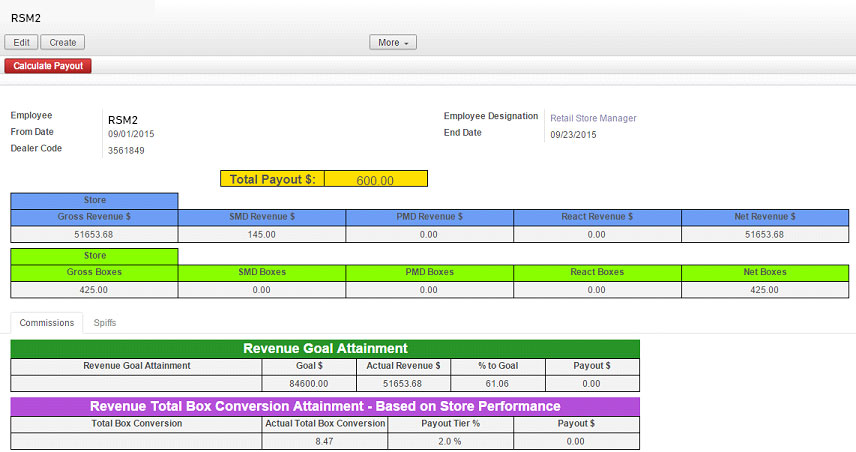

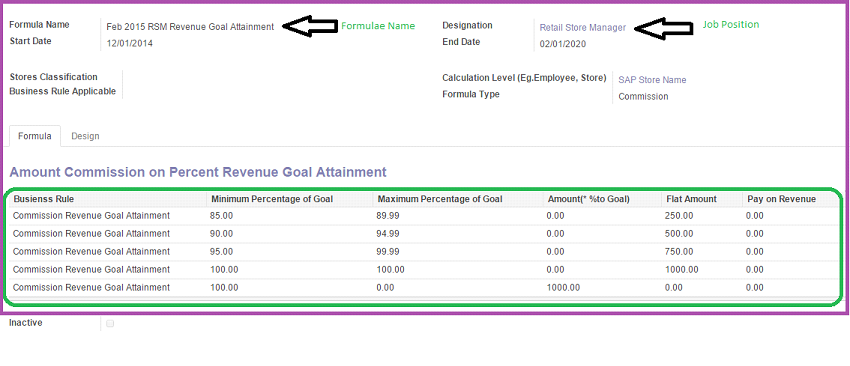

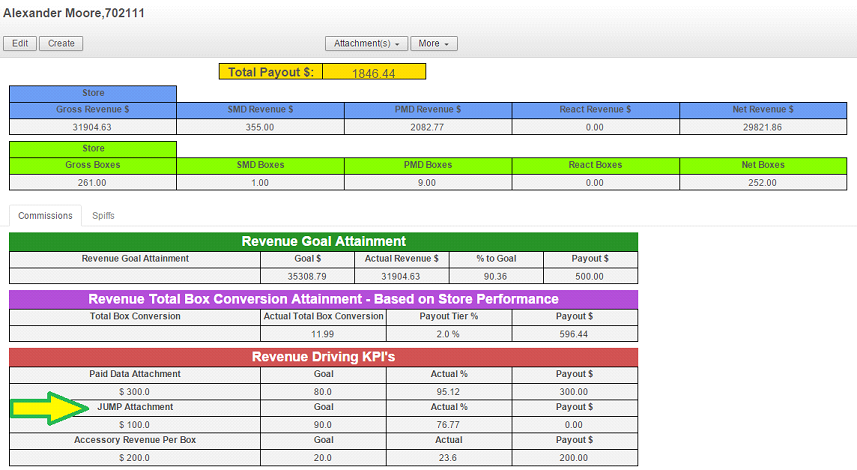

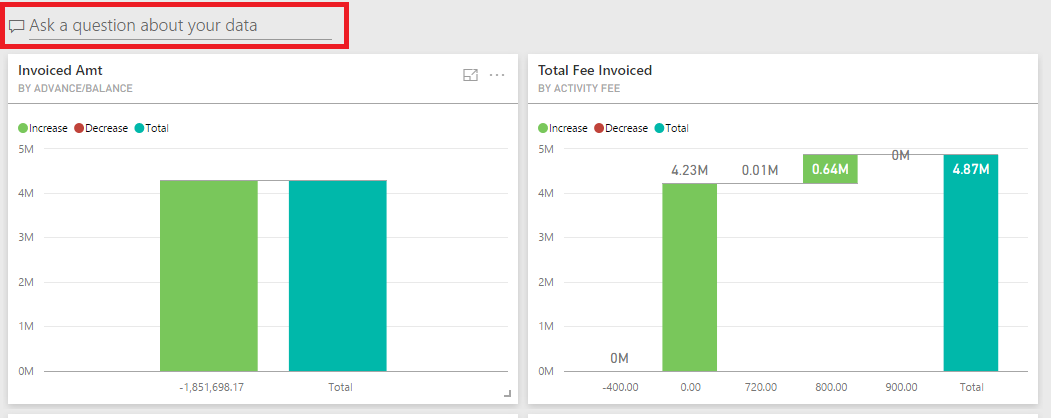

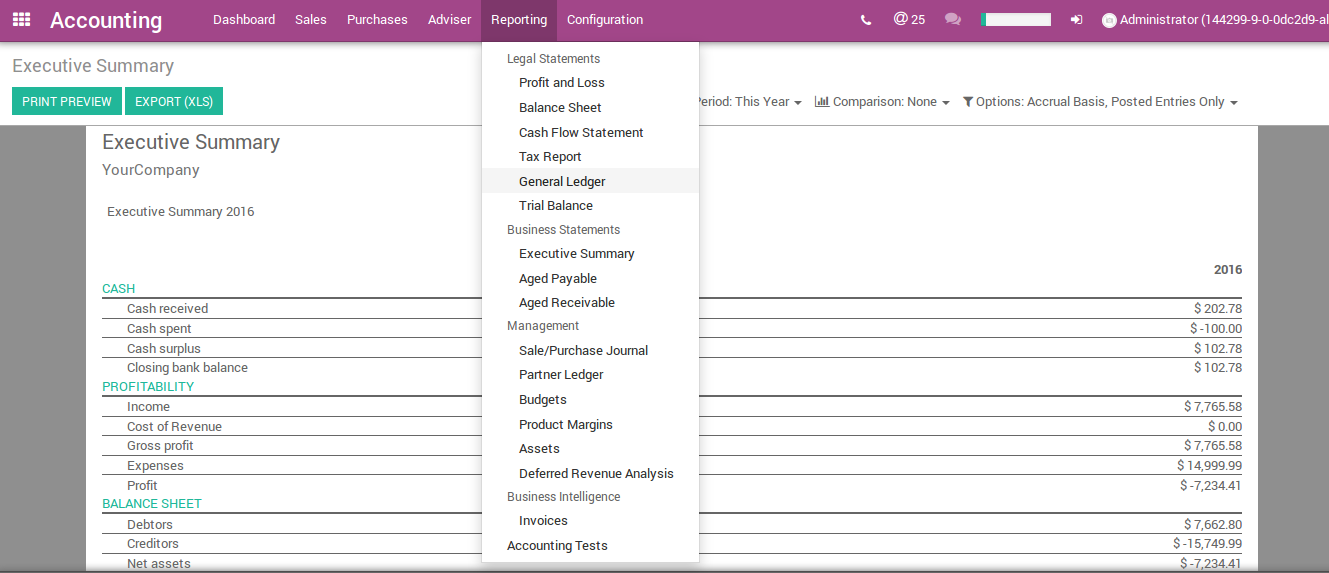

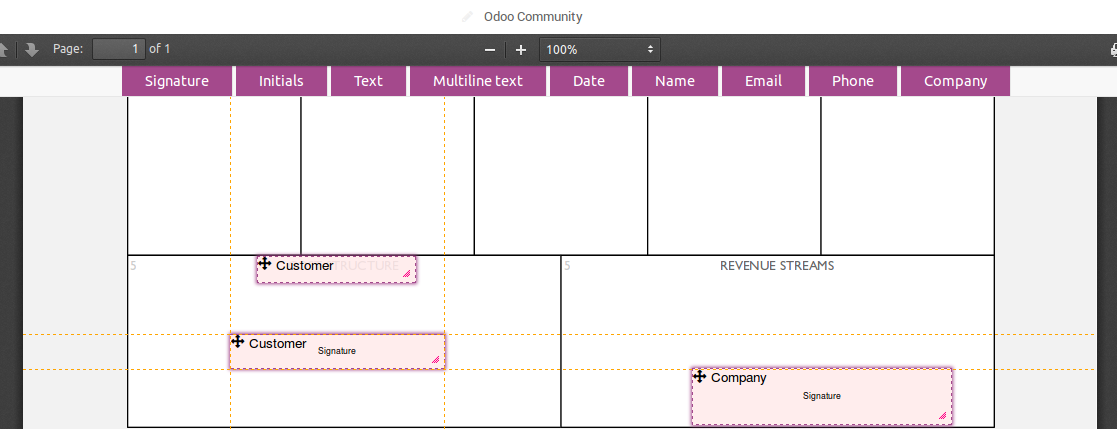

The screenshot below shows an example of the commission parameters for a telecom business, the payout structure for a Retail Store Manager (RSM), and how simple it is to set the commission structure for one of the RSM payout parameters (Revenue Goal Attainment).

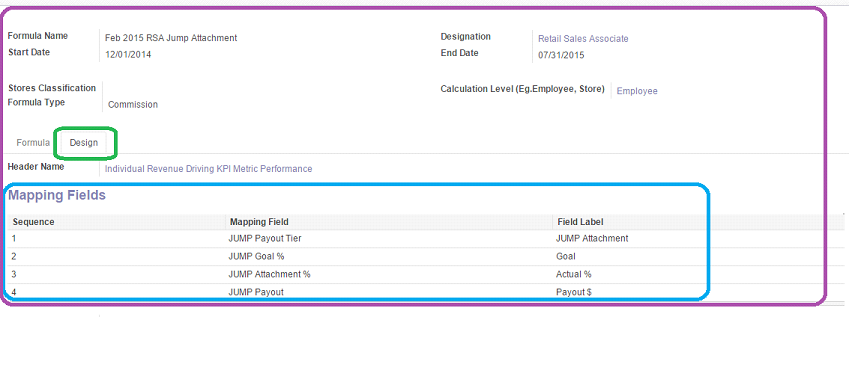

With a simple configuration like the one above, the head of the Sales/Commissions department can set up targets for the different job positions within his department in a matter of minutes, and can set up the entire payout structure for the field employees within half an hour, on either a monthly or a quarterly basis. Setting up this formula not only defines the structure but also defines the design for this parameter in the Commission Tracker; see the screenshots below.

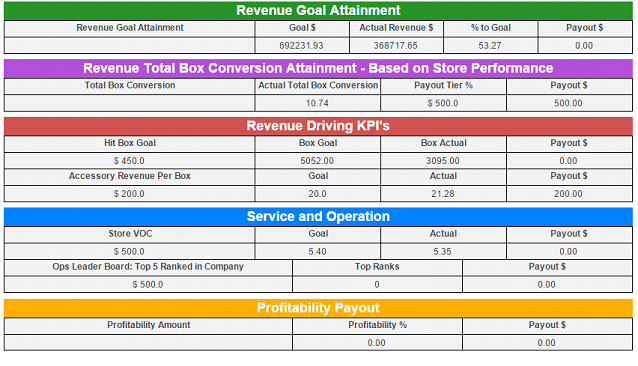
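As a rough sketch of how a parameter such as Revenue Goal Attainment could translate into a payout, the following Python snippet maps an attainment percentage onto a tiered payout table. The tier thresholds and amounts in `REVENUE_GOAL_TIERS`, and the function names, are invented for illustration; the actual formula configured in the screenshots may look quite different.

```python
from bisect import bisect_right

# Hypothetical tiers: (minimum attainment %, payout amount).
# Must be sorted by threshold in ascending order.
REVENUE_GOAL_TIERS = [
    (0.0, 0.0),
    (80.0, 250.0),
    (100.0, 500.0),
    (120.0, 800.0),
]


def attainment_pct(actual_revenue: float, revenue_goal: float) -> float:
    """Revenue achieved as a percentage of the goal."""
    if revenue_goal <= 0:
        raise ValueError("revenue_goal must be positive")
    return actual_revenue * 100.0 / revenue_goal


def tier_payout(pct: float, tiers=REVENUE_GOAL_TIERS) -> float:
    """Payout of the highest tier whose threshold is <= pct."""
    thresholds = [threshold for threshold, _ in tiers]
    index = bisect_right(thresholds, pct) - 1
    return tiers[max(index, 0)][1]


pct = attainment_pct(actual_revenue=45_000, revenue_goal=50_000)
print(pct, tier_payout(pct))
```

Keeping the tiers as data rather than code mirrors the point made above: the department head only edits the table for each job position and period, and the calculation follows from it.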

The result is as you see below for an insurance parameter for phones, known commercially as “JUMP”.

JUMP Attachment specifies the sales rep's percentage of insurance sold on phone purchases. The yellow arrow indicates the performance of a store, as well as of the Store Manager, in selling phone insurance, and their payouts.

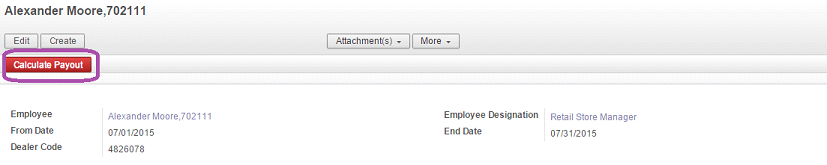

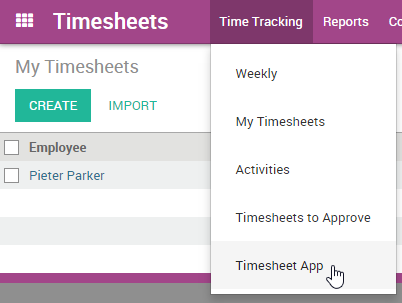
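For readers who want the arithmetic spelled out, here is a small Python sketch of an attachment-rate calculation. It assumes that JUMP Attachment means "phones sold with insurance as a percentage of all phones sold"; that definition, the `attachment_rate` function, and the sample figures are assumptions made for illustration, not taken from the client's configuration.

```python
def attachment_rate(phones_sold: int, phones_with_insurance: int) -> float:
    """Assumed definition: insured phones as a percentage of phones sold."""
    if phones_sold == 0:
        return 0.0  # no sales yet, so nothing to attach insurance to
    return phones_with_insurance * 100.0 / phones_sold


# Hypothetical month-to-date figures per rep: (phones sold, sold with insurance).
store_sales = {"Asha": (40, 10), "Ben": (60, 30)}

for rep, (sold, insured) in store_sales.items():
    print(rep, attachment_rate(sold, insured))

# The store-level rate is computed from the totals, not by averaging rep rates.
total_sold = sum(sold for sold, _ in store_sales.values())
total_insured = sum(insured for _, insured in store_sales.values())
print("Store", attachment_rate(total_sold, total_insured))
```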

With a single click of a button, a Sales Rep, Store Manager, Market Manager, or Region Director can see their payouts for the current month or for other months, based on live operational data as entries are being made in the store. All of this takes just a single button, as the image on the next page shows.

See the screen below: this is all an employee has to do to view his commission for the specified month, and his total performance appears before him within a matter of seconds, as shown in the screenshots above.

Conclusion:-

We often look for apps that look far fancier but end up slowing the system down considerably with a single process. In today's business world, time is what matters, especially for the sales department: salespeople are mostly in the field and need more time to engage with potential opportunities rather than spending it on software applications.

Hence, Bista’s CT provides an easier and better platform for checking sales performance at any given point in time, without waiting for hefty reports to load.